Introduction

In the previous chapters we discussed the basics of programming in Python and the role mathematics plays in bringing Artificial Intelligence into software development. The two topics are closely related and form the core of AI fields such as Machine Learning and Data Science.

Machine Learning, a sub-branch of the broad field of Artificial Intelligence, is the area of computer science that gives software systems the ability to learn. The aim of this learning is to improve a system's performance on a task through experience, without the system being explicitly programmed to produce a specific output. The emphasis of machine learning is to help computer systems learn from the data they are given, without step-by-step human instruction.

Just as the human brain learns from experience, a computer system needs information to learn from. This data is supplied during a phase called Data Preparation (also referred to as Data Observation). During this phase the system is exposed to large amounts of data that drive its learning process. Working with this input data teaches the machine which patterns to look for and what the data is meant to predict, which in turn lets it produce the required analysis and forecasts as output.

Machine Learning and its Varied Forms

Machine Learning algorithms are grouped according to the type of input they receive and the computations they perform to learn from it. We will cover the mathematics and detailed implementation of these algorithms soon, but for now let us look at their broad classification.

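[Figure: The three categories of Machine Learning (Supervised, Unsupervised and Reinforcement Learning) and their use cases]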

Machine Learning is broadly classified into three categories: Supervised, Unsupervised and Reinforcement Learning. The image above contrasts these categories and their use cases.

Supervised Machine Learning

Algorithms that learn from labelled data are called supervised learning algorithms, and they are the most widely used approach for training models. Labelled data means that every example contains both the question (the input) and the answer (the expected output). The algorithm studies many input and output pairs and learns which kinds of input map to which outputs. Based on this learning, it can then predict the output for new data it has not seen before.

To understand how supervised learning works, suppose we have an input variable x and an output variable Y. We need a function that maps the input to the output:

Y = f(x)

The central objective is to estimate this mapping function well enough that, whenever new input data (x) is presented, the algorithm can predict the corresponding output (Y).
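As a minimal sketch of learning Y = f(x) from labelled pairs, the example below assumes, purely for illustration, that the hidden mapping is linear (Y = 2x + 1) and uses scikit-learn's `LinearRegression` to estimate it from five examples. It then predicts Y for an input that was not in the training data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Labelled training data: inputs x and their known outputs Y.
# Illustrative assumption: the true (hidden) mapping is Y = 2x + 1.
x = np.array([[0.0], [1.0], [2.0], [3.0], [4.0]])  # shape (n_samples, n_features)
Y = 2 * x.ravel() + 1

# Learn an estimate of f from the (x, Y) pairs
model = LinearRegression()
model.fit(x, Y)

# Predict the output for a new input the model has never seen
prediction = model.predict(np.array([[10.0]]))
print("learned slope:", round(model.coef_[0], 3), "intercept:", round(model.intercept_, 3))
print("prediction for x = 10:", round(prediction[0], 3))
```

Because the training data is exactly linear, the learned slope and intercept should come out very close to 2 and 1, and the prediction close to 21. Real data is noisy, so a learned f is only ever an approximation.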

Supervised learning problems can be divided into two main kinds:

- Classification: The output is a categorical variable, such as color or gender, which can take only one of a fixed set of values; the algorithm has to choose from this set. We will be covering Classification in detail in this chapter.

- Regression: The output is a continuous numeric value, for instance the weight of a car or the height of a tree. Predicting such a quantity is a regression problem.
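The difference between the two kinds of problem shows up in what the model returns. In this small sketch (the data is made up for illustration), the same inputs are used once with category labels and once with numeric targets, using scikit-learn's k-nearest-neighbors classifier and regressor with k = 3.

```python
from sklearn.neighbors import KNeighborsClassifier, KNeighborsRegressor

# One input feature, e.g. a size measurement (illustrative data)
X = [[1], [2], [3], [10], [11], [12]]

# Classification target: one label from a fixed set of categories
y_class = ["small", "small", "small", "large", "large", "large"]
# Regression target: a continuous numeric value
y_value = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]

clf = KNeighborsClassifier(n_neighbors=3).fit(X, y_class)
reg = KNeighborsRegressor(n_neighbors=3).fit(X, y_value)

new_points = [[2.5], [10.4]]
class_pred = clf.predict(new_points)  # majority label of the 3 nearest neighbors
value_pred = reg.predict(new_points)  # mean target of the 3 nearest neighbors
print(class_pred)
print(value_pred)
```

The classifier can only answer "small" or "large", while the regressor returns a number (here 2.0 and 11.0, the averages of the three nearest targets).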

Some algorithms commonly used for supervised learning tasks are Decision Trees, Random Forests, k-Nearest Neighbors (KNN) and Logistic Regression.
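All four of these algorithms are available in scikit-learn behind the same `fit`/`predict` interface. The sketch below trains each one on the built-in Iris dataset and reports test accuracy; the dataset, split ratio and hyperparameters are illustrative choices, not tuned settings.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import RandomForestClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y)

models = {
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(random_state=0),
    "KNN": KNeighborsClassifier(n_neighbors=5),
    "Logistic Regression": LogisticRegression(max_iter=1000),
}

scores = {}
for name, model in models.items():
    model.fit(X_train, y_train)  # same training call for every algorithm
    scores[name] = accuracy_score(y_test, model.predict(X_test))
    print(f"{name}: {scores[name]:.3f}")
```

The shared interface makes it cheap to try several algorithms on the same split before settling on one.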

Unsupervised Machine Learning

The key difference between supervised and unsupervised algorithms is that unsupervised algorithms are not given labelled data: the expected output is unknown to the algorithm during training. Because they find structure without being told the answers, unsupervised algorithms are often what people associate with Artificial Intelligence. There is not necessarily a correct or incorrect answer; these algorithms help reveal patterns in the information and discover interesting structure in the data.

Unsupervised Machine Learning algorithms can be divided into the following:

- Clustering: Clustering problems involve discovering the groupings that are inherently present in the input data. For example, grouping soccer players by their goal-scoring attributes. An example of a clustering algorithm is K-Means Clustering.

- Association: A problem is one of association when the algorithm tries to discover rules that connect portions of the input data. For instance, finding common traits among players who both score goals and give assists. An example is the Apriori algorithm.
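Association rules are usually scored with two measures: support (how often items occur together) and confidence (how often the rule's right-hand side holds when its left-hand side does). Apriori is not part of scikit-learn, so the sketch below computes these two measures in plain Python on a small, made-up set of player records. It only finds frequent pairs; it is not a full Apriori implementation.

```python
from itertools import combinations

# Hypothetical data: each set lists the traits observed for one player in a match
records = [
    {"scores_goal", "gives_assist", "plays_forward"},
    {"scores_goal", "plays_forward"},
    {"scores_goal", "gives_assist"},
    {"gives_assist", "plays_midfield"},
    {"scores_goal", "gives_assist", "plays_forward"},
]

def support(itemset, records):
    """Fraction of records that contain every item in itemset."""
    return sum(1 for r in records if itemset <= r) / len(records)

def confidence(antecedent, consequent, records):
    """Estimate of P(consequent | antecedent) = support(A | C) / support(A)."""
    return support(antecedent | consequent, records) / support(antecedent, records)

# Keep only pairs of items that appear together often enough (minimum support 0.4)
items = sorted(set().union(*records))
frequent_pairs = [set(pair) for pair in combinations(items, 2)
                  if support(set(pair), records) >= 0.4]

for pair in frequent_pairs:
    a, c = sorted(pair)
    print(f"{a} -> {c}: support={support(pair, records):.2f}, "
          f"confidence={confidence({a}, {c}, records):.2f}")
```

Here the rule "gives_assist -> scores_goal" has support 0.6 and confidence 0.75: three of the five players do both, and three of the four who give assists also score. Apriori's contribution is pruning: any itemset containing an infrequent pair cannot itself be frequent, so it never needs to be counted.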

Reinforcement Machine Learning

Reinforcement learning algorithms are used less often than the other two categories (supervised and unsupervised). They train an agent to make decisions based on its interaction with an environment. Through trial and error, the agent learns how objects in that environment behave and which actions lead to good outcomes (rewards). Because the algorithm learns from its own experience, its decisions can be very accurate when the environment and rewards are designed well, and can go drastically wrong otherwise. This sensitivity is one reason the approach is less widely used. Reinforcement learning problems are usually formalized as a Markov Decision Process (MDP).
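To make trial-and-error learning concrete, here is a minimal tabular Q-learning sketch (Q-learning is one standard reinforcement learning algorithm; the text does not name a specific one). The environment is a hypothetical five-state corridor, a tiny MDP where reaching the last state earns a reward of 1. The learning rate, discount factor and exploration rate are illustrative values.

```python
import random

# Hypothetical environment: a corridor of 5 states (0..4); reaching state 4 ends
# the episode with reward 1. Actions: 0 = move left, 1 = move right.
N_STATES, GOAL = 5, 4
ACTIONS = [0, 1]

def step(state, action):
    """Return (next_state, reward, done) for the corridor environment."""
    next_state = max(0, state - 1) if action == 0 else min(GOAL, state + 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def q_learning(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # Q-table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: explore at random with probability epsilon, otherwise
            # act greedily (ties broken at random so early learning is not biased)
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning update: move Q(s, a) toward reward + discounted best future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = q_learning()
policy = [0 if q[0] > q[1] else 1 for q in q_table[:GOAL]]
print(policy)  # greedy action in each non-terminal state; 1 means "move right"
```

After training, the greedy policy should move right in every state: the agent was never told where the goal is and found it only from rewards received along the way.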

Classification and Clustering of Data

Classification is a supervised learning task, while clustering is an unsupervised one. Both group similar data points together. The difference is that classification works on labelled data whose possible outputs are known in advance, whereas clustering works on unlabelled data. Classification assigns each input to one of a fixed, finite set of predefined classes; clustering, on the other hand, has no predefined classes and discovers the groups from the data itself.
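The contrast can be seen by running both approaches on the same data. In this sketch (the Iris dataset and the choice of KNN and K-Means are illustrative), the classifier is trained with the species labels, while K-Means sees only the measurements and invents its own group ids.

```python
from sklearn.datasets import load_iris
from sklearn.cluster import KMeans
from sklearn.neighbors import KNeighborsClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score, adjusted_rand_score

X, y = load_iris(return_X_y=True)

# Classification (supervised): the species labels y are used during training
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)
clf = KNeighborsClassifier().fit(X_train, y_train)
clf_accuracy = accuracy_score(y_test, clf.predict(X_test))

# Clustering (unsupervised): only X is used; the algorithm assigns its own group ids
kmeans = KMeans(n_clusters=3, n_init=10, random_state=0).fit(X)
cluster_ids = kmeans.labels_

# Cluster ids are arbitrary (cluster 0 need not be species 0), so the grouping is
# compared with the true labels using a permutation-invariant score, not accuracy
ari = adjusted_rand_score(y, cluster_ids)
print(f"classifier accuracy: {clf_accuracy:.3f}, clustering ARI: {ari:.3f}")
```

The adjusted Rand index is 1.0 for a grouping that matches the labels exactly and around 0 for a random one, which makes it a fair way to judge clusters that come without class names.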

There are two types of classification:

- Binary (binomial): Each output is classified into one of two possible categories.

- Multi-class: Each output is classified into one of more than two categories.
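One practical way to see the difference is in the class probabilities a model returns. This sketch fits the same scikit-learn pipeline (feature scaling followed by logistic regression, an illustrative choice) to a binary dataset and a multi-class dataset and compares the shape of `predict_proba` for a single sample.

```python
from sklearn.datasets import load_breast_cancer, load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Binary: the breast cancer data has two classes (malignant / benign)
X_bin, y_bin = load_breast_cancer(return_X_y=True)
# Multi-class: the iris data has three classes (three species)
X_multi, y_multi = load_iris(return_X_y=True)

shapes = {}
for name, X, y in [("binary", X_bin, y_bin), ("multi-class", X_multi, y_multi)]:
    # Scaling the features first helps logistic regression converge
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)).fit(X, y)
    proba = model.predict_proba(X[:1])  # one probability per class for the first sample
    shapes[name] = proba.shape
    print(name, "classes:", model.classes_, "probability shape:", proba.shape)
```

A binary model yields two probabilities per sample and a three-class model yields three; in both cases the predicted class is the one with the highest probability.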

Use cases of Classification:

- Deciding whether a received email is spam based on the history of spam messages received.

- Identifying segments of shopping customers based on their purchase patterns.

- Predicting whether a customer will repay a loan based on their credit history.

- Predicting whether a student will pass a given examination based on an analysis of the questions and the student’s academic background.

Clustering or Cluster Analysis

Cluster Analysis is an unsupervised learning technique for finding interesting patterns in data. There is generally no single correct algorithm to use, so it is good practice to try a range of algorithms and parameter settings before drawing conclusions. A cluster is often a region of high density in the input space.
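Exploring "a range of algorithms with varied combinations" can be automated with an internal quality score. This sketch, on synthetic data with three hand-picked dense regions (an assumption for illustration), tries K-Means and agglomerative clustering with 2 to 5 clusters and ranks each combination by its silhouette score.

```python
from sklearn.datasets import make_blobs
from sklearn.cluster import KMeans, AgglomerativeClustering
from sklearn.metrics import silhouette_score

# Synthetic data with three dense regions (illustrative; centers chosen by hand)
X, _ = make_blobs(n_samples=300, centers=[[0, 0], [5, 5], [0, 8]],
                  cluster_std=0.6, random_state=0)

results = {}
for k in range(2, 6):
    for name, algo in [("KMeans", KMeans(n_clusters=k, n_init=10, random_state=0)),
                       ("Agglomerative", AgglomerativeClustering(n_clusters=k))]:
        labels = algo.fit_predict(X)
        # Silhouette score: closer to 1 means tighter, better-separated clusters
        results[(name, k)] = silhouette_score(X, labels)

best = max(results, key=results.get)
print("best combination:", best, "silhouette:", round(results[best], 3))
```

On data this cleanly separated, both algorithms should prefer three clusters. On real data the scores are often much closer, which is exactly why comparing several candidates is worthwhile.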

Use cases of Clustering:

- Identifying fake news and sensationalized or click-bait articles.

- Personalized advertisement targeting for customers based on their search patterns.

- Understanding the broad patterns of network traffic on a page.

- Analysis of fantasy football and recommendation of players for next week’s team.

Understanding Classifiers in Python

To build classifiers in Python, we will use scikit-learn, a library for Machine Learning. Here are some important functions and methods to understand before moving on to building a classifier.

Scikit-Learn: The scikit-learn library is probably the most comprehensive machine learning toolkit in Python. It provides tools for preprocessing data, training models and evaluating their results. We will be using scikit-learn throughout this course to implement various machine learning and artificial intelligence algorithms.

Importing Datasets from Scikit-Learn: Scikit-learn includes several built-in datasets meant for practising machine learning. These datasets are open and bundled with the library, so they can be used by locally running code. We will use import statements to load some of them.
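A quick sketch of two convenient ways to load the bundled datasets: `return_X_y=True` gives the feature matrix and labels directly, and `as_frame=True` (available in recent scikit-learn versions and requiring pandas) returns pandas objects instead of NumPy arrays.

```python
from sklearn.datasets import load_iris, load_breast_cancer

# return_X_y=True returns the feature matrix and the target vector directly
X, y = load_iris(return_X_y=True)
print(X.shape, y.shape)  # 150 samples with 4 features each

# as_frame=True returns pandas objects; .frame holds the features and the target together
cancer = load_breast_cancer(as_frame=True)
print(cancer.frame.shape)  # 569 rows: 30 feature columns plus the target column
```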

Train-Test-Split: This function splits arrays or matrices into random training and testing subsets. The size of each subset can be set explicitly through the function's arguments. Keeping part of the data out of training lets us evaluate the model on examples it has never seen, which is how we detect overfitting and underfitting.

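# Function signature: sklearn.model_selection.train_test_split(*arrays, test_size=None, train_size=None, random_state=None, shuffle=True, stratify=None)

# test_size: a float between 0.0 and 1.0 gives the proportion of the data placed in the test set (an int gives an absolute number of samples); if train_size is also None, it defaults to 0.25

# train_size: like test_size, but for the training set; if None, it is set to the complement of test_size

# random_state: fixes the random shuffling so that the split is reproducible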

# Implementing it using a numpy array

import numpy as np

from sklearn.model_selection import train_test_split

X, y = np.arange(10).reshape((5, 2)), range(5)

X

>>> array([[0, 1], [2, 3], [4, 5], [6, 7], [8, 9]])

list(y)

>>> [0, 1, 2, 3, 4]

# Split the data, putting 40% of it (2 of the 5 rows) in the test set; random_state=42 makes the result reproducible

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)

# Check all 4 resulting arrays to see how the input has been split

X_train

>>> array([[4, 5], [0, 1], [6, 7]])

y_train

>>> [2, 0, 3]

X_test

>>> array([[2, 3], [8, 9]])

y_test

>>> [1, 4]

# Evaluating the model on the held-out test set helps reveal underfitting and overfitting
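The held-out test set is what exposes overfitting. In this sketch (the breast cancer dataset and an unrestricted decision tree are illustrative choices), the model is scored on both splits. A tree with no depth limit can memorise its training data, so the gap between training and test accuracy shows how much of what it learned fails to generalise. The `stratify=y` argument keeps the class proportions the same in both splits.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_breast_cancer(return_X_y=True)
# stratify=y keeps the malignant/benign ratio the same in the train and test sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y)

# A decision tree with no depth limit can fit the training data almost perfectly
tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
train_acc = tree.score(X_train, y_train)
test_acc = tree.score(X_test, y_test)

# A large gap between the two scores is a typical sign of overfitting
print(f"train accuracy: {train_acc:.3f}, test accuracy: {test_acc:.3f}")
```

Without the test split, only the near-perfect training score would be visible and the model would look better than it really is.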

We will be using scikit-learn, NumPy, pandas, matplotlib and seaborn in the remainder of the course for data preparation, cleaning, visualization and training. Together, these libraries make Python one of the strongest languages for solving data science problems. Let us get started with our first machine learning example in the following section.

The Naïve Bayes Algorithm


Representation of how Naïve Bayes algorithms work. In general, other classification and clustering models follow the same principle of grouping data; only the mathematical operations they perform differ.
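Naïve Bayes applies Bayes' theorem, P(class | x) ∝ P(class) × P(x1 | class) × P(x2 | class) × ..., with the "naïve" assumption that features are independent once the class is known. The Gaussian variant used below models each feature, within each class, as a normal distribution. The following from-scratch sketch is meant for intuition rather than as scikit-learn's actual implementation. Its small variance floor imitates GaussianNB's `var_smoothing` default, and it checks its predictions against `GaussianNB` on the Iris data.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.naive_bayes import GaussianNB

def fit_gaussian_nb(X, y):
    classes = np.unique(y)
    priors = np.array([np.mean(y == c) for c in classes])        # P(class)
    means = np.array([X[y == c].mean(axis=0) for c in classes])  # per-class feature means
    eps = 1e-9 * X.var(axis=0).max()                             # small variance floor
    variances = np.array([X[y == c].var(axis=0) for c in classes]) + eps
    return classes, priors, means, variances

def predict_gaussian_nb(model, X):
    classes, priors, means, variances = model
    diff = X[:, None, :] - means[None, :, :]                     # (samples, classes, features)
    # Summing per-feature Gaussian log-densities is the "naive" independence assumption
    log_likelihood = -0.5 * (np.log(2 * np.pi * variances)[None]
                             + diff ** 2 / variances[None]).sum(axis=2)
    log_posterior = np.log(priors)[None, :] + log_likelihood     # Bayes' rule, up to a constant
    return classes[np.argmax(log_posterior, axis=1)]

X, y = load_iris(return_X_y=True)
ours = predict_gaussian_nb(fit_gaussian_nb(X, y), X)
sklearn_preds = GaussianNB().fit(X, y).predict(X)
agreement = np.mean(ours == sklearn_preds)
print(f"agreement with GaussianNB: {agreement:.3f}")
```

Working in log-probabilities avoids multiplying many small numbers together, which would otherwise underflow when there are many features.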

# Import scikit-learn and load the Breast Cancer Wisconsin (Diagnostic) dataset

import sklearn

from sklearn.datasets import load_breast_cancer

data_set = load_breast_cancer()

# Separate the features (inputs) and the labels (outputs) of the imported data set

label_names = data_set['target_names']

labels = data_set['target']

feature_names = data_set['feature_names']

features = data_set['data']

# There are two classes (the output labels) to predict: malignant and benign

print(label_names)

>>> ['malignant' 'benign']

# Print the raw feature data, then use the DESCR attribute to read a description of the dataset and its columns

print(data_set.data)

>>>

[[1.799e+01 1.038e+01 1.228e+02 ... 2.654e-01 4.601e-01 1.189e-01]

[2.057e+01 1.777e+01 1.329e+02 ... 1.860e-01 2.750e-01 8.902e-02]

[1.969e+01 2.125e+01 1.300e+02 ... 2.430e-01 3.613e-01 8.758e-02]

...

[1.660e+01 2.808e+01 1.083e+02 ... 1.418e-01 2.218e-01 7.820e-02]

[2.060e+01 2.933e+01 1.401e+02 ... 2.650e-01 4.087e-01 1.240e-01]

[7.760e+00 2.454e+01 4.792e+01 ... 0.000e+00 2.871e-01 7.039e-02]]

…. Output Truncated

print(data_set.DESCR)

>>>

Breast cancer wisconsin (diagnostic) dataset

--------------------------------------------

**Data Set Characteristics:**

:Number of Instances: 569

:Number of Attributes: 30 numeric, predictive attributes and the class

:Attribute Information:

- radius (mean of distances from center to points on the perimeter)

- texture (standard deviation of gray-scale values)

- perimeter

- area

- smoothness (local variation in radius lengths)

- compactness (perimeter^2 / area - 1.0)

- concavity (severity of concave portions of the contour)

- concave points (number of concave portions of the contour)

- symmetry

- fractal dimension ("coastline approximation" - 1)

…. Output Truncated

# Import pandas to use data frames for exploration

# Read the DataFrame, using the feature names from the data set

import pandas as pd

df = pd.DataFrame(data_set.data, columns=data_set.feature_names)

# Add a column holding the target labels (0 = malignant, 1 = benign)

df['target'] = data_set.target

# Use the info() method from pandas to see a summary of the columns (names, non-null counts and data types)

df.info()

>>>

RangeIndex: 569 entries, 0 to 568

Data columns (total 31 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 mean radius 569 non-null float64

1 mean texture 569 non-null float64

2 mean perimeter 569 non-null float64

3 mean area 569 non-null float64

4 mean smoothness 569 non-null float64

5 mean compactness 569 non-null float64

6 mean concavity 569 non-null float64

7 mean concave points 569 non-null float64

8 mean symmetry 569 non-null float64

9 mean fractal dimension 569 non-null float64

10 radius error 569 non-null float64

11 texture error 569 non-null float64

12 perimeter error 569 non-null float64

13 area error 569 non-null float64

…. Output Truncated

# Use the head() method from Pandas to see the top of the data set

df.head()

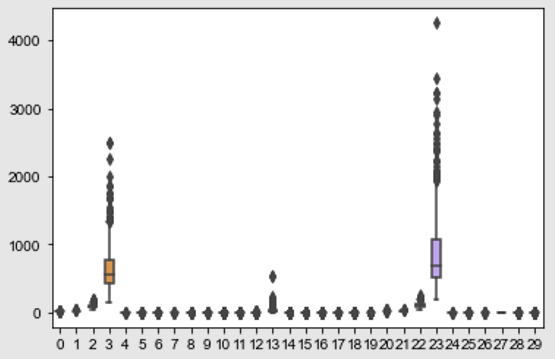
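Before training, it is worth checking how many examples each class has, because accuracy can be misleading when one class dominates. This sketch rebuilds the same DataFrame so that it runs on its own, then maps the numeric target to the class names stored in `target_names` and counts them with pandas' `value_counts`.

```python
import pandas as pd
from sklearn.datasets import load_breast_cancer

data_set = load_breast_cancer()
df = pd.DataFrame(data_set.data, columns=data_set.feature_names)
df['target'] = data_set.target

# Map the numeric target (0 = malignant, 1 = benign) to readable names and count each class
class_counts = df['target'].map(dict(enumerate(data_set.target_names))).value_counts()
print(class_counts)
```

The dataset contains 357 benign and 212 malignant samples, so a model that always answered "benign" would already score about 63% accuracy. That baseline is useful to keep in mind when reading the accuracy reported below.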

# Import seaborn (and matplotlib) to visualize the input data

# A box plot of every feature shows how each one is spread and where its outliers lie

import matplotlib.pyplot as plt

import seaborn as sns

# Set the figure size before drawing, otherwise it does not apply to this plot

sns.set(rc={'figure.figsize': (15, 6)})

box_plot = data_set.data

sns.boxplot(data=box_plot, width=0.6, fliersize=7)

plt.show()

# We will now move towards applying Machine Learning to this data

# Currently we know the target variables, and we have labelled input data as well.

# Use the train_test_split to create the training and testing data sets

from sklearn.model_selection import train_test_split

train, test, train_labels, test_labels = train_test_split(features,labels,test_size = 0.33, random_state = 40)

# Import the Gaussian Naïve Bayes classifier from scikit-learn

from sklearn.naive_bayes import GaussianNB

g_nb = GaussianNB()

# The fit method is used to train the model using the training data

model = g_nb.fit(train, train_labels)

# The predict method runs the learned model on the test data to generate the output

predicts = g_nb.predict(test)

print(predicts)

>>> [1 0 1 1 0 1 1 1 0 1 0 1 1 1 0 1 1 1 1 1 1 0 1 0 0 0 0 0 1 1 1 0 0 1 1 1 1

0 1 1 0 1 1 1 0 0 1 1 0 1 0 1 1 1 1 1 0 0 0 1 1 0 1 1 1 0 0 0 1 1 1 0 0 1

0 0 0 1 1 1 1 1 0 1 0 1 0 0 1 0 1 1 1 1 1 0 1 1 1 1 1 1 1 0 1 1 1 0 1 1 1

1 0 1 0 1 1 1 1 0 0 1 1 1 1 0 0 1 1 1 1 1 1 1 1 1 1 1 0 1 0 1 1 0 1 1 1 1

1 0 1 0 1 0 1 1 0 1 0 1 0 1 1 1 1 1 1 1 0 1 0 0 1 0 1 0 1 1 1 1 1 1 1 1 1

1 1 0]

# Each prediction is a class label: 0 = malignant, 1 = benign

# Every time a model is trained, use accuracy_score to check how well it performs on the test data

from sklearn.metrics import accuracy_score

print(accuracy_score(test_labels, predicts))

>>> 0.9680851063829787

# This model reaches roughly 97% accuracy on the test set
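Accuracy is a single number; a confusion matrix shows which kinds of mistakes the model makes. This matters here because missing a malignant case is worse than a false alarm. The sketch repeats the same split and model so it runs on its own, turns the numeric predictions into class names, and uses scikit-learn's `confusion_matrix` and `classification_report`.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.metrics import accuracy_score, confusion_matrix, classification_report

data_set = load_breast_cancer()
train, test, train_labels, test_labels = train_test_split(
    data_set['data'], data_set['target'], test_size=0.33, random_state=40)
predicts = GaussianNB().fit(train, train_labels).predict(test)

# Translate the numeric predictions (0/1) into class names for readability
print(data_set['target_names'][predicts][:5])

# Rows are the true classes and columns the predicted classes (order: malignant, benign)
cm = confusion_matrix(test_labels, predicts)
print(cm)
print(classification_report(test_labels, predicts, target_names=data_set['target_names']))
```

The diagonal of the matrix holds the correct predictions, so its sum divided by the total equals the accuracy printed earlier. The off-diagonal cells separate malignant tumours predicted as benign from the reverse.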

This is a basic example of implementing the Naïve Bayes classifier in Python. In the next chapter we will look at feature engineering and feature selection to improve the preprocessing of data.

This chapter continues in Part Two.